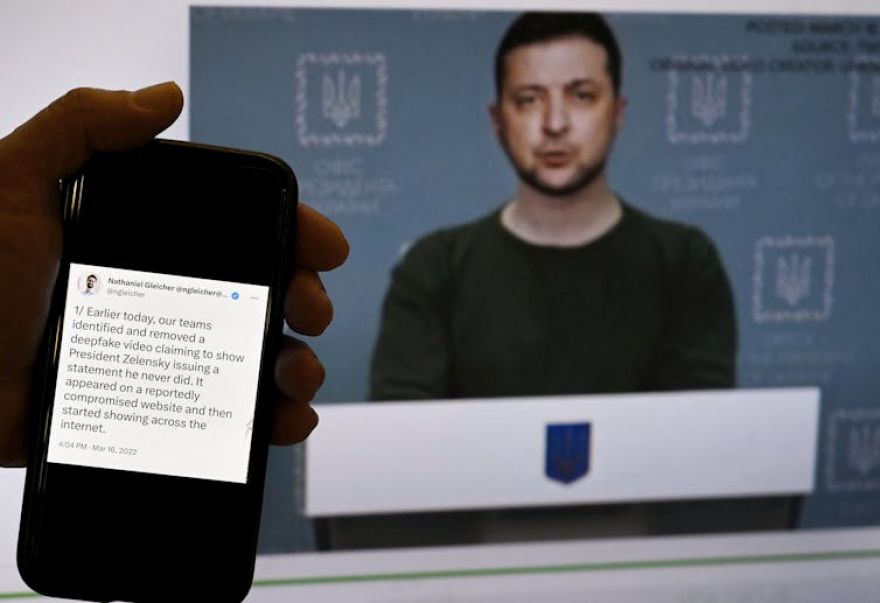

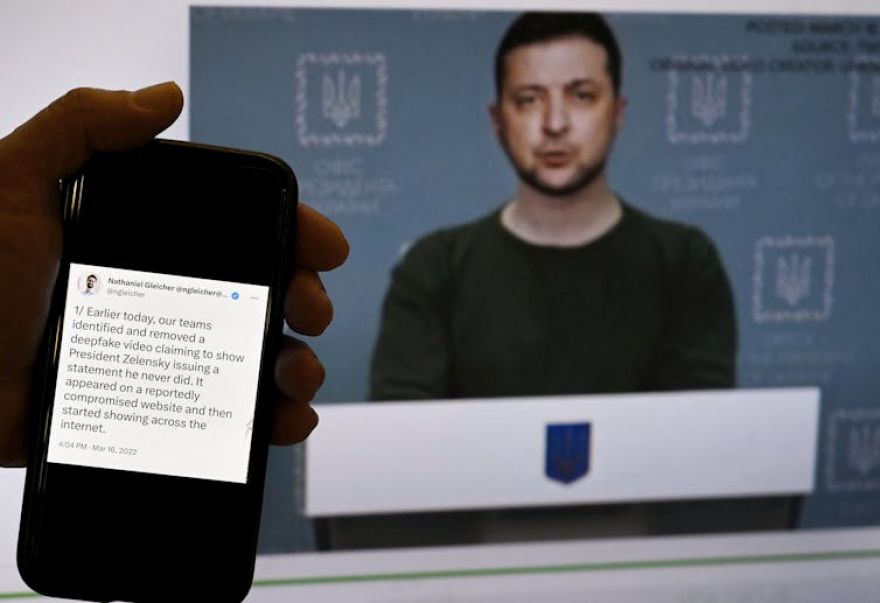

Deepfakes – essentially putting words in someone else’s mouth in a very believable way – are becoming more sophisticated by the day and increasingly hard to spot. Recent examples of deepfakes include fake nude images of Taylor Swift, an audio recording of President Joe Biden telling New Hampshire residents not to vote, and a video of Ukrainian President Volodymyr Zelenskyy calling on his troops to lay down their arms. Although companies have created detectors to help spot deepfakes, studies have found that biases in the data used to train these tools can lead to certain demographic groups being unfairly targeted.

In 1981, the American physicist and Nobel laureate Richard Feynman gave a lecture at the Massachusetts Institute of Technology (MIT) near Boston, in which he outlined a revolutionary idea. Feynman suggested that the strange physics of quantum mechanics could be used to perform calculations. The field of quantum computing was born. In the 40-plus years since, it has become an intensive area of research in computer science. Despite years of frantic development, physicists have not yet built practical quantum computers that are well suited to everyday use under normal conditions (for example, many quantum computers operate only at very low temperatures).

Our growing reliance on technology at home and in the workplace has raised the profile of e-waste. This consists of discarded electrical devices including laptops, smartphones, televisions, computer servers, washing machines, medical equipment, games consoles and much more. The amount of e-waste produced this decade could reach as much as 5 million metric tonnes, according to recent research published in Nature. This is around 1,000 times more e-waste than was produced in 2023. According to the study, the boom in artificial intelligence will significantly contribute to this e-waste problem, because AI requires lots of computing power and storage.

Artificial intelligence (AI) makes important decisions that affect our everyday lives. These decisions are implemented by firms and institutions in the name of efficiency. They can help determine who gets into college, who lands a job, who receives medical treatment and who qualifies for government assistance. As AI takes on these roles, there is a growing risk of unfair decisions – or of decisions being perceived as unfair by the people they affect. For example, in college admissions or hiring, these automated decisions can unintentionally favour certain groups of people or those with certain backgrounds, while equally qualified but underrepresented applicants get overlooked.

Businesses are already being radically transformed by artificial intelligence (AI). Tools now exist that offer instantaneous, high-quality results in improving certain operations without the burden of high costs or delays. In fact, generative AI could completely upend the traditional ways that we measure success in business. Generative AI refers to programs that produce text, images, ideas and even complex software code in response to prompts (questions or instructions) from a user. Applications powered by these data-driven algorithms let users create polished content quickly. A small café, for example, can generate aesthetically pleasing menus in a few clicks through apps like Jasper.AI.

The European Space Agency has given the go-ahead for initial work on a mission to visit an asteroid called (99942) Apophis. If approved at a key meeting next year, the robotic spacecraft, known as the Rapid Apophis Mission for Space Safety (Ramses), will rendezvous with the asteroid in February 2029. Apophis is 340 metres wide, about the same as the height of the Empire State Building. If it were to hit Earth, it would cause wholesale destruction hundreds of miles from its impact site. The energy released would equal that of tens or hundreds of nuclear weapons, depending on the yield of the device.

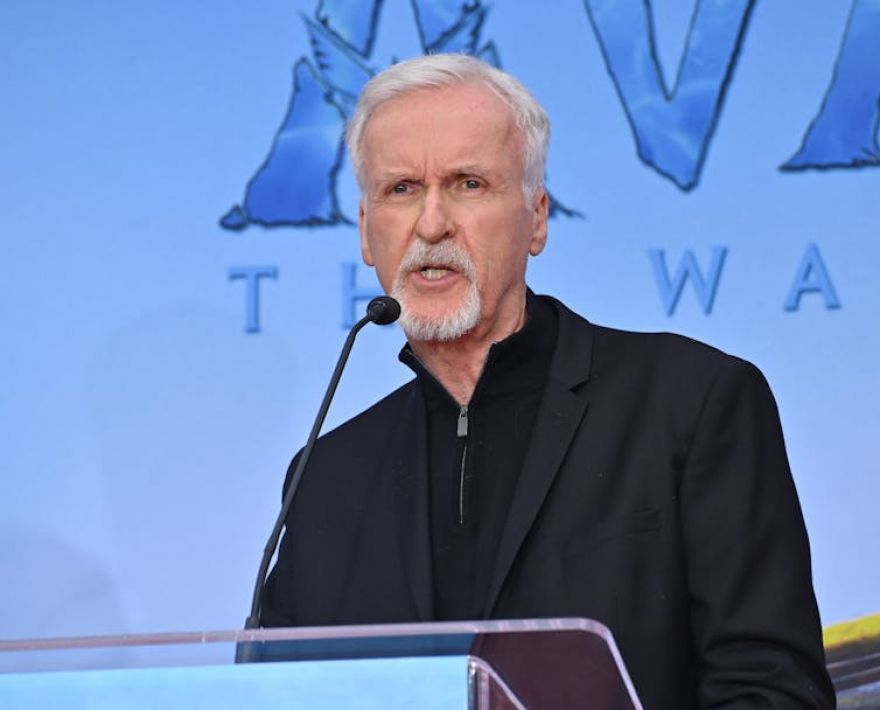

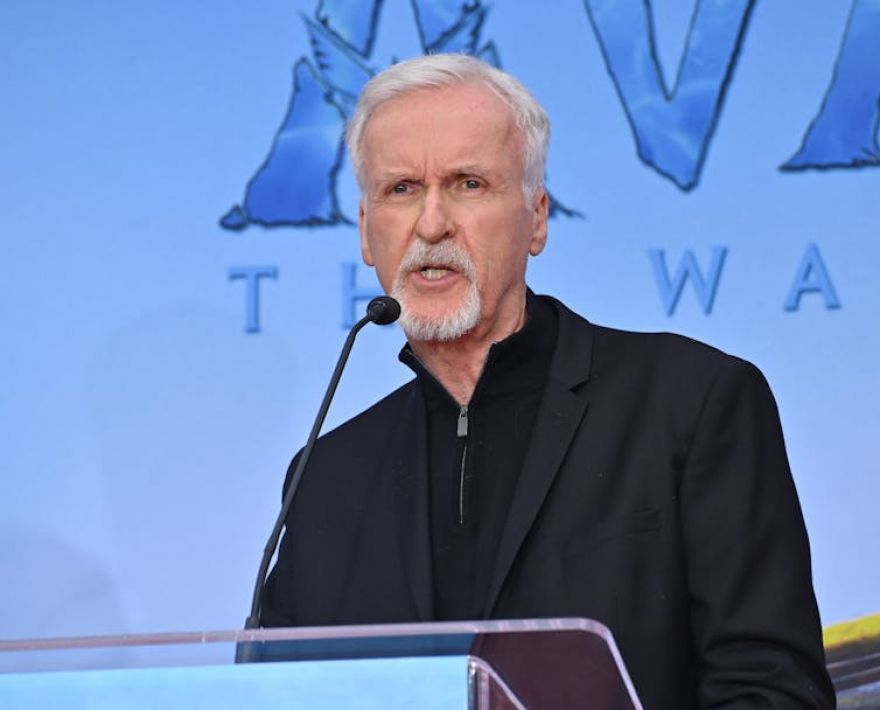

While many people in the creative industries are worrying that AI is about to steal their jobs, Oscar-winning film director James Cameron is embracing the technology. Cameron is famous for making the Avatar and Terminator movies, as well as Titanic. Now he has joined the board of Stability.AI, a leading player in the world of generative AI. In Cameron’s Terminator films, Skynet is an artificial general intelligence that has become self-aware and is determined to destroy the humans who are trying to deactivate it. Forty years after the first of those movies, its director appears to be changing sides and allying himself with AI.

OpenAI, the company that made ChatGPT, has launched a new artificial intelligence (AI) system called Strawberry. It is designed not just to provide quick responses to questions, like ChatGPT, but to think or “reason”. This raises several major concerns. If Strawberry really is capable of some form of reasoning, could this AI system cheat and deceive humans? OpenAI can program the AI in ways that mitigate its ability to manipulate humans. But the company’s own evaluations rate it as a “medium risk” for its ability to assist experts in the “operational planning of reproducing a known biological threat” – in other words, a biological weapon.

As is their tradition at this time of year, Apple announced a new line of iPhones last week. The promised centrepiece that would make us want to buy these new devices was AI – or Apple Intelligence, as they branded it. Yet the reaction from the collective world of consumer technology has been muted. The lack of enthusiasm from consumers was so evident it immediately wiped over a hundred billion dollars off Apple’s market value. Even the hosts of the Wired Gadget Lab podcast, enthusiasts of all new things tech, found nothing in the new capabilities that would make them want to upgrade to the iPhone 16.