Scientists develop a robot capable of listening when told it’s wrong, in real time

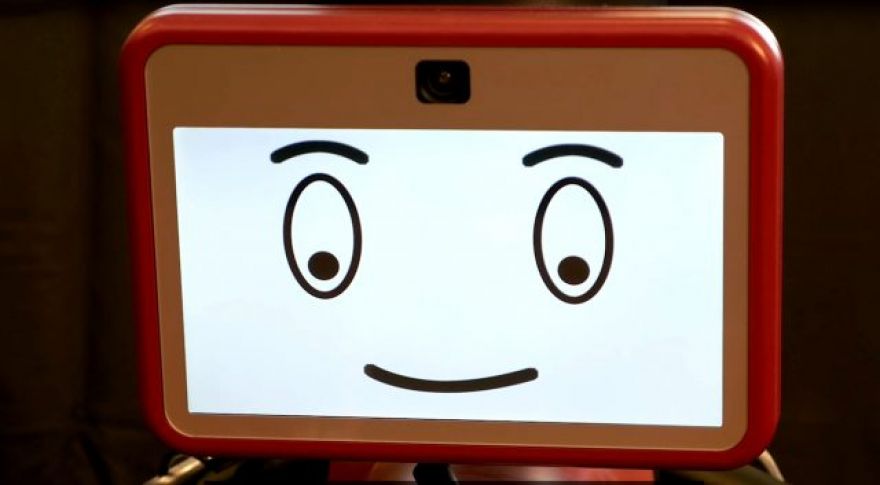

Baxter is a robot developed in 2012 by Rethink Robotics, the company founded by former CSAIL director Rodney Brooks. Now a research team from Boston University and MIT’s CSAIL has enabled Baxter to interpret and obey brain waves in real time. It’s a step toward smoother control of, and coexistence with, robots: the ability to take action remotely simply by thinking “no.”

Their setup gave Baxter a simple sorting task and paired it with a human judge wearing an EEG cap. Baxter’s job was to sort objects into the correct one of two bins. Whenever it made a mistake, the human judge was asked to “mentally disagree” with it.

“Imagine being able to instantaneously tell a robot to do a certain action, without needing to type a command, push a button or even say a word,” said CSAIL Director Daniela Rus. “A streamlined approach like that would improve our abilities to supervise factory robots, driverless cars, and other technologies we haven’t even invented yet.”

In fairness, I have to agree: my mental “no!” reaction happens a lot faster than I can lunge for a button, even a really important one. The system relies on error-related potentials (ErrPs), the brain signals we generate when we notice a mistake. ErrPs are faint but distinct, and they get “louder” as we register an error with greater emphasis. As the robot indicates which bin it means to sort an object into, the system reads the judge’s EEG for ErrPs to see whether the human agrees with Baxter’s decision. That let Baxter catch itself mid-sort and correct a wrong guess in real time.
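The loop described above can be pictured with a deliberately simplified sketch. It is not the BU/CSAIL team’s pipeline: the names `ErrPDetector` and `final_bin`, the 250 Hz sample rate, the 200–600 ms post-cue window, and the 5 µV threshold are all hypothetical. Real systems use classifiers trained on each user’s EEG rather than a fixed amplitude cutoff. What the sketch shows is the decision logic: the robot keeps its choice unless an ErrP-like response shows up shortly after it signals its intent, and then switches bins.

```python
from dataclasses import dataclass
from typing import Sequence

BINS = ("left", "right")  # the two sorting bins


@dataclass
class ErrPDetector:
    """Hypothetical ErrP detector (an assumption, not the study's method).

    Flags an error when the mean absolute amplitude inside a post-cue
    window exceeds a fixed threshold. Window and threshold are illustrative.
    """
    sample_rate_hz: int = 250
    window_ms: tuple[int, int] = (200, 600)
    threshold_uv: float = 5.0

    def detects_error(self, epoch_uv: Sequence[float]) -> bool:
        # Convert the millisecond window into sample indices for this epoch.
        start = self.window_ms[0] * self.sample_rate_hz // 1000
        stop = self.window_ms[1] * self.sample_rate_hz // 1000
        window = epoch_uv[start:stop]
        if not window:
            return False  # epoch too short to contain the window
        score = sum(abs(x) for x in window) / len(window)
        return score > self.threshold_uv


def final_bin(initial_choice: str, epoch_uv: Sequence[float],
              detector: ErrPDetector) -> str:
    """Keep the robot's choice unless the judge's EEG signals an error."""
    if initial_choice not in BINS:
        raise ValueError(f"unknown bin: {initial_choice!r}")
    if detector.detects_error(epoch_uv):
        # Binary task: "disagreement" means the other bin is correct.
        return BINS[1 - BINS.index(initial_choice)]
    return initial_choice
```

With only two bins, detecting disagreement is enough to determine the correct answer, which is part of why a binary sorting task suits this kind of experiment.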

“As you watch the robot, all you have to do is mentally agree or disagree with what it is doing,” said Rus. “You don’t have to train yourself to think in a certain way — the machine adapts to you, and not the other way around.”

Beyond manufacturing and industrial applications, though, this development could help establish fluid and meaningful communication between locked-in patients (and other potentially communication-starved folks) and their loved ones and caregivers. MIT recently participated in another experiment with . The participants were four totally locked-in ALS patients. One source of uncertainty in that study’s results is that the patients’ answers didn’t always make sense in the context of the question. How valuable could this ability to object to a robot’s mistake be for correcting communication errors in such sensitive situations?