Nvidia Opens Drive to All, Unveils SoC for Self-Driving Cars, TensorRT 7

Nvidia CEO Jensen Huang announced some exciting autonomous vehicle news at the company’s GTC keynote. First, the company is making its pre-trained Drive models publicly available for research and development, along with a set of transfer learning tools for customizing and deploying them. Second, a new family of automotive SoCs called Orin is on the way. Huang also unveiled version 7 of TensorRT, Nvidia's inference software, with dramatically improved support for conversational AI.

Nvidia Drive Models Now Available to All

Nvidia has spent a tremendous amount of time and money creating and training a wide variety of models for building automated driving systems.

Now Nvidia is making all of them publicly available through its Nvidia GPU Cloud (NGC). It is exciting enough that anyone with a compute budget can now access the models, but it is even more interesting that Nvidia is also providing a set of transfer learning and deployment tools. These let users download the models and customize them by further training on their own data. The resulting models can then be uploaded and run on NGC, run on local GPUs, or deployed with Nvidia's tools onto Nvidia SoCs such as Xavier or the new Orin.
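To make the idea of "further training on their own data" concrete, here is a minimal sketch of one common transfer learning pattern: keep a pretrained feature extractor frozen and train only a new, small output head on local data. It is not Nvidia's toolkit or API. The feature extractor, the data, and all function names are hypothetical stand-ins chosen so the example runs in plain Python.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def pretrained_features(x: Point) -> List[float]:
    """Hypothetical frozen feature extractor standing in for a downloaded model.

    Transfer learning here never modifies this function; only the new head
    is trained. The trailing 1.0 lets the head learn a bias term.
    """
    return [x[0] + x[1], x[0] - x[1], 1.0]


def _score(w: Sequence[float], feats: Sequence[float]) -> float:
    # Sigmoid output of the linear head on top of the frozen features.
    z = sum(wi * fi for wi, fi in zip(w, feats))
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data: Sequence[Point], labels: Sequence[int],
               epochs: int = 200, lr: float = 0.5) -> List[float]:
    """Fit only a new logistic-regression head with stochastic gradient descent."""
    w = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for x, y in zip(data, labels):
            feats = pretrained_features(x)
            err = _score(w, feats) - y  # gradient of log loss w.r.t. the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, feats)]
    return w


def predict(w: Sequence[float], x: Point) -> bool:
    return _score(w, pretrained_features(x)) >= 0.5


# Hypothetical "own data": label 1 when the two inputs sum to more than 1.
DATA: List[Point] = [(0.0, 0.0), (0.2, 0.3), (1.0, 1.0), (0.9, 0.8)]
LABELS: List[int] = [0, 0, 1, 1]

if __name__ == "__main__":
    weights = train_head(DATA, LABELS)
    print([predict(weights, x) for x in DATA])
```

Freezing the extractor is what makes this cheap: the expensive, data-hungry part of the model is reused as-is, and only a handful of parameters are fitted to the new data. Real toolkits typically also allow unfreezing and fine-tuning deeper layers.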

Nvidia’s TensorRT inferencing software allows high-performance deployment of models developed in a wide variety of popular toolkits.

Federated training is also supported. A global car company, for example, could train on data collected in multiple geographies at once and combine the results into a single global model.
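The article does not say how Nvidia implements federated training. As a generic illustration, the sketch below uses federated averaging (FedAvg), a widely used scheme in which each site trains locally and shares only its model weights and sample count. A coordinator then merges them into a global model, weighting each site by how much data it trained on. The region names, weights, and sample counts are hypothetical.

```python
from typing import Dict, List, Tuple

Weights = List[float]


def federated_average(updates: Dict[str, Tuple[Weights, int]]) -> Weights:
    """Merge per-region model weights into one global model (FedAvg-style).

    `updates` maps a region name to (weights, num_samples). Raw training data
    never leaves the region; only these weights and counts are shared.
    """
    total = sum(n for _, n in updates.values())
    if total == 0:
        raise ValueError("no training samples reported")
    length = len(next(iter(updates.values()))[0])
    merged = [0.0] * length
    for weights, n in updates.values():
        if len(weights) != length:
            raise ValueError("all regions must share the same model shape")
        # Each region contributes in proportion to its share of the data.
        for i, w in enumerate(weights):
            merged[i] += w * n / total
    return merged


if __name__ == "__main__":
    regional_updates = {
        "europe": ([0.2, 0.4], 1000),          # hypothetical local results
        "north_america": ([0.6, 0.0], 3000),
    }
    print(federated_average(regional_updates))
```

In a real deployment this merge step repeats over many rounds: the merged model is sent back to each region, trained further on local data, and averaged again.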

New Drive AGX Orin Auto SoC Sports 17 Billion Transistors

If there is one thing that most of those working on developing autonomous vehicles agree on, it is that they need all the processing power they can get. Most current test vehicles rely on multiple high-power CPUs and GPUs. For widespread adoption, vehicles will have to run on less-expensive, less-power-hungry, but even more powerful processors.

To help meet those needs, Nvidia announced the Orin family of automotive SoCs. Packed with 17 billion transistors and developed over the last four years, Orin will be capable of over 200 trillion operations per second (TOPS), nearly seven times the 30 TOPS of the current Xavier SoC. It includes Arm Hercules CPU cores and Nvidia’s next-generation GPU architecture. Orin is designed for both vehicles and robots, and Nvidia says it is being built with safety standards such as ISO 26262 ASIL-D in mind.

Nvidia claims Orin will be useful for everything from Level 2 solutions all the way up to Level 5. Cost will be a factor in how widely it gets used in Level 2 products, and the jury is still out on how much horsepower Level 5 requires, so I’d mark that claim as speculation. The good news for developers is that Orin runs the same software stack as Xavier, so current projects that use Nvidia’s tools like CUDA and TensorRT should move over easily. The Orin family will include a range of configurations, but as of press time, Nvidia has not released specific availability dates or prices.

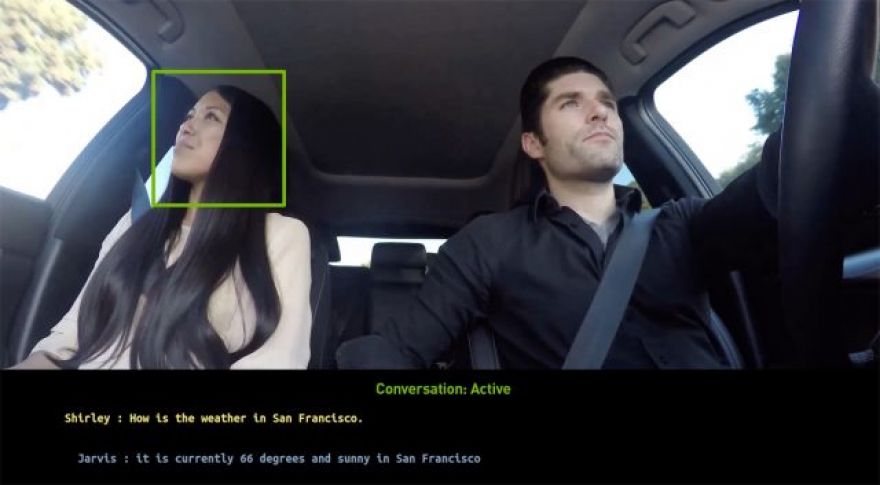

New TensorRT 7 Allows Real-Time Conversational AI

While object and facial recognition used to be the most common applications for AI “at the edge,” voice recognition and voice processing have quickly caught up. Juniper Research estimates that there are now over 3 billion devices with digital voice assistants worldwide. Not all of them include sophisticated voice recognition and conversational features. Until recently, though, the ones that do, typically by using advanced language models like BERT, have required powerful back-end processors. Besides the extra processing and bandwidth demands, sending audio off to those servers inevitably adds latency.

With TensorRT 7, Nvidia has added support for Recurrent Neural Networks (RNNs), along with optimizations for the transformer-based language models, such as BERT, that many popular conversational AI systems build on, all in its edge-friendly inferencing runtime. That means it will be possible to deploy conversational AI in a wide variety of embedded devices without requiring cloud connectivity or processing. Nvidia claims TensorRT 7 delivers enough performance for a complete speech-recognition, natural-language-understanding, and text-to-speech pipeline to respond in under 300 ms.
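Because the three stages of a voice pipeline run one after another, their latencies add up, and the 300 ms figure is a budget shared by all of them. The sketch below shows one way to time each stage and check the total against that budget. The stage functions are hypothetical placeholders that return canned results; a real system would call optimized speech and language models at those points, and nothing here is a TensorRT API.

```python
import time
from typing import Any, Callable, List, Sequence, Tuple

BUDGET_MS = 300.0  # end-to-end target cited for ASR + NLU + TTS

Stage = Tuple[str, Callable[[Any], Any]]


def run_pipeline(stages: Sequence[Stage], payload: Any) -> Tuple[Any, List[Tuple[str, float]]]:
    """Run stages in order, feeding each output to the next, timing each one in ms."""
    timings: List[Tuple[str, float]] = []
    for name, fn in stages:
        start = time.perf_counter()
        payload = fn(payload)
        timings.append((name, (time.perf_counter() - start) * 1000.0))
    return payload, timings


def within_budget(timings: Sequence[Tuple[str, float]], budget_ms: float = BUDGET_MS) -> bool:
    # Stages run back to back, so the end-to-end latency is the sum.
    return sum(ms for _, ms in timings) <= budget_ms


# Hypothetical placeholder stages returning canned results.
def fake_asr(audio: bytes) -> str:
    return "what time is it"


def fake_nlu(text: str) -> dict:
    return {"intent": "get_time"}


def fake_tts(intent: dict) -> bytes:
    return b"\x00" * 16  # stand-in for synthesized audio samples


PIPELINE: List[Stage] = [("asr", fake_asr), ("nlu", fake_nlu), ("tts", fake_tts)]

if __name__ == "__main__":
    audio_out, stage_times = run_pipeline(PIPELINE, b"raw-microphone-audio")
    for name, ms in stage_times:
        print(f"{name}: {ms:.3f} ms")
    print("within budget:", within_budget(stage_times))
```

For example, stage times of 200, 50, and 80 ms would miss the budget (330 ms in total) even though no single stage comes close to 300 ms, which is why each model in the chain has to be fast.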

Nvidia Continues to Make AI Inroads in China

Along with its new product news, Nvidia announced some significant new design wins in China. Building on Nvidia's automotive strength, Didi, China’s largest ride-sharing firm, announced it will use Nvidia technology for both the development and deployment of its self-driving cars.

Baidu, China’s largest search engine, announced that it is using Nvidia V100 GPUs in its recommendation engine, achieving a 10x speedup in network training over its previous technology. Alibaba is also now powering user searches of its two-billion-product catalog with Nvidia T4 GPUs, giving its 500 million daily users faster and more accurate search results. Alibaba credits that performance improvement for an impressive 10 percent gain in click-through rate (CTR).
