How to protect your AI systems against adversarial machine learning

With machine learning becoming increasingly popular, experts are growing worried about the security threats the technology will entail. We are still exploring the possibilities: The breakdown of autonomous driving systems? Inconspicuous theft of sensitive data from deep neural networks? Failure of deep learning–based biometric authentication? Subtle bypass of content moderation algorithms?

Meanwhile, machine learning algorithms have already found their way into critical fields such as finance, health care, and transportation, where security failures can have severe repercussions.

Parallel to the increased adoption of machine learning algorithms in different domains, there has been growing interest in adversarial machine learning, the field of research that explores ways learning algorithms can be compromised.

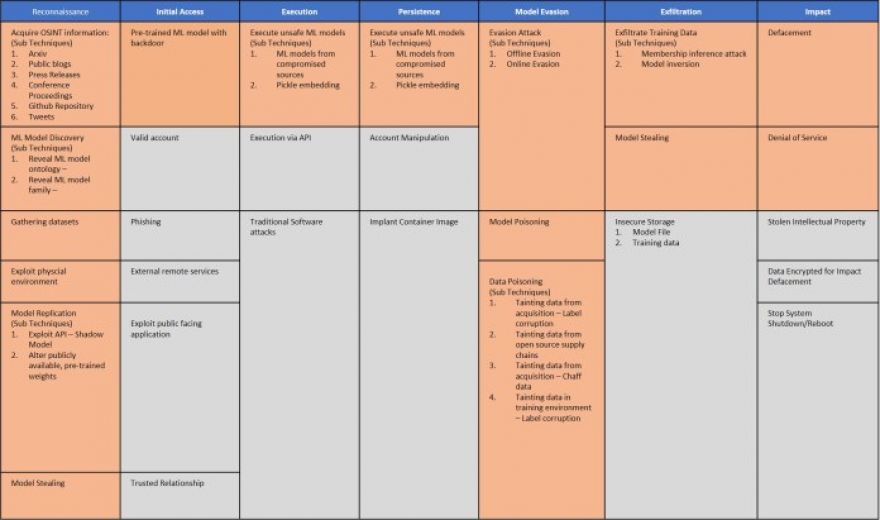

And now, we finally have a framework to detect and respond to adversarial attacks against machine learning systems. Called the Adversarial ML Threat Matrix, the framework is the result of a joint effort between AI researchers at 13 organizations, including Microsoft, IBM, Nvidia, and MITRE.

While still in early stages, the ML Threat Matrix provides a consolidated view of how malicious actors can take advantage of weaknesses in machine learning algorithms to target organizations that use them. And its key message is that the threat of adversarial machine learning is real and organizations should act now to secure their AI systems.

Applying ATT&CK to machine learning

The Adversarial ML Threat Matrix is presented in the style of ATT&CK, a tried-and-tested framework developed by MITRE to deal with cyber-threats in enterprise networks. ATT&CK provides a table that summarizes different adversarial tactics and the types of techniques that threat actors perform in each area.

Since its inception, ATT&CK has become a popular guide for cybersecurity experts and threat analysts to find weaknesses and speculate on possible attacks. The ATT&CK format of the Adversarial ML Threat Matrix makes it easier for security analysts to understand the threats to machine learning systems. It is also an accessible document for machine learning engineers who might not be deeply acquainted with cybersecurity operations.

“Many industries are undergoing digital transformation and will likely adopt machine learning technology as part of service/product offerings, including making high-stakes decisions,” Pin-Yu Chen, AI researcher at IBM, told TechTalks in written comments. “The notion of ‘system’ has evolved and become more complicated with the adoption of machine learning and deep learning.”

For instance, Chen says, an automated financial loan application recommendation can change from a transparent rule-based system to a black-box neural network-oriented system, which could have considerable implications for how the system can be attacked and secured.

“The adversarial threat matrix analysis (i.e., the study) bridges the gap by offering a holistic view of security in emerging ML-based systems, as well as illustrating their causes from traditional means and new risks induced by ML,” Chen says.

The Adversarial ML Threat Matrix combines known and documented tactics and techniques used in attacking digital infrastructure with methods that are unique to machine learning systems. Like the original ATT&CK table, each column represents one tactic (or area of activity) such as reconnaissance or model evasion, and each cell represents a specific technique.

For instance, to attack a machine learning system, a malicious actor must first gather information about the underlying model (reconnaissance column). This can be done through the gathering of open-source information (arXiv papers, GitHub repositories, press releases, etc.) or through experimentation with the application programming interface that exposes the model.
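To make the table layout concrete, here is a minimal sketch of how a slice of such a matrix could be represented in code. It is only an illustration: the dictionary, the function names (`techniques_for`, `tactics_using`), and the wording of the entries are assumptions paraphrased from the description above, not the official contents of the Adversarial ML Threat Matrix.

```python
from typing import Dict, List

# Hypothetical, partial representation: each key is a tactic (a column),
# each list item is a technique (a cell). Entries paraphrase the article.
THREAT_MATRIX: Dict[str, List[str]] = {
    "reconnaissance": [
        "gather open-source information (papers, repositories, press releases)",
        "experiment with the API that exposes the model",
    ],
    "model evasion": [],  # techniques are not enumerated in the article
}


def techniques_for(tactic: str) -> List[str]:
    """Return the techniques listed under a tactic, or an empty list."""
    return THREAT_MATRIX.get(tactic.lower(), [])


def tactics_using(keyword: str) -> List[str]:
    """Return the tactics with at least one technique mentioning keyword."""
    needle = keyword.lower()
    return [
        tactic
        for tactic, techniques in THREAT_MATRIX.items()
        if any(needle in technique.lower() for technique in techniques)
    ]


if __name__ == "__main__":
    for technique in techniques_for("Reconnaissance"):
        print("reconnaissance:", technique)
    print("tactics involving the API:", tactics_using("api"))
```

An analyst could extend a structure like this with the organization's own systems to see which columns of the matrix apply to each deployed model.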

The complexity of machine learning security

Each new type of technology comes with its unique security and privacy implications. For instance, the advent of web applications with database backends introduced the concept of SQL injection. Browser scripting languages such as JavaScript ushered in cross-site scripting attacks. The internet of things (IoT) introduced new ways to create botnets and conduct distributed denial of service (DDoS) attacks. Smartphones and mobile apps create new attack vectors for malicious actors and spying agencies.

The security landscape has evolved and continues to develop to address each of these threats. We have anti-malware software, web application firewalls, intrusion detection and prevention systems, DDoS protection solutions, and many more tools to fend off these threats.

For instance, security tools can scan binary executables for the digital fingerprints of malicious payloads, and static analysis can find vulnerabilities in software code. Many platforms such as GitHub and Google Play have already integrated many of these tools and do a good job of finding security holes in the software they house.

But in adversarial attacks, malicious behavior and vulnerabilities are deeply embedded in the thousands or millions of parameters of deep neural networks, which makes them both hard to find and beyond the capabilities of current security tools.
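A toy example can show why such weaknesses live in the parameters rather than in the code. The sketch below uses a four-feature linear classifier with made-up weights and a gradient-sign perturbation, a standard technique from the adversarial machine learning literature that is swapped in here for illustration and is not taken from the Threat Matrix itself. Every name and number in it is an assumption.

```python
from typing import List

# Made-up parameters of a toy linear classifier: score = w . x + b.
WEIGHTS = [0.9, -1.2, 0.4, -0.7]
BIAS = -0.05


def score(x: List[float]) -> float:
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def predict(x: List[float]) -> int:
    """Return class 1 if the score is positive, otherwise class 0."""
    return 1 if score(x) > 0 else 0


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def perturb(x: List[float], epsilon: float) -> List[float]:
    """Shift every feature by at most epsilon against the current prediction.

    For a linear model the gradient of the score with respect to the input
    is the weight vector, so stepping along sign(w) is the gradient-sign step.
    """
    direction = -1 if predict(x) == 1 else 1
    return [xi + direction * epsilon * sign(w) for xi, w in zip(x, WEIGHTS)]


x = [0.5, 0.2, 0.3, 0.3]
x_adv = perturb(x, epsilon=0.05)

if __name__ == "__main__":
    print("original input:", x, "prediction:", predict(x))
    print("perturbed input:", x_adv, "prediction:", predict(x_adv))
```

Nothing in the source code is buggy here, so a static analyzer would have nothing to flag. The flip in prediction comes entirely from how the weights respond to a small, targeted change in the input.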

“Traditional software security usually does not involve the machine learning component because it’s a new piece in the growing system,” Chen says, adding that bringing machine learning into the security landscape yields new insights and risk assessments.

The Adversarial ML Threat Matrix comes with a set of case studies of attacks that involve traditional security vulnerabilities, adversarial machine learning, and combinations of both. What’s important is that contrary to the popular belief that adversarial attacks are limited to lab environments, the case studies show that production machine learning systems can be and have been compromised with adversarial attacks.

For instance, in one case study, the security team at Microsoft Azure used open-source data to gather information about a target machine learning model. They then used a valid account on the server to obtain the machine learning model and its training data. They used this information to find adversarial vulnerabilities in the model and develop attacks against the API that exposed its functionality to the public.

Other case studies show how attackers can compromise various aspects of the machine learning pipeline and the software stack to conduct data poisoning attacks, bypass spam detectors, or force AI systems to reveal confidential information.

The matrix and these case studies can guide analysts in finding weak spots in their software and can guide security tool vendors in creating new tools to protect machine learning systems.

“Inspecting a single dimension (machine learning vs traditional software security) only provides an incomplete security analysis of the system as a whole,” Chen says. “Like the old saying goes: security is only as strong as its weakest link.”

Machine learning developers need to pay attention to adversarial threats

Unfortunately, developers and adopters of machine learning algorithms are not taking the necessary measures to make their models robust against adversarial attacks.

“The current development pipeline is merely ensuring a model trained on a training set can generalize well to a test set, while neglecting the fact that the model is often overconfident about the unseen (out-of-distribution) data or maliciously embedded Trojan patterns in the training set, which offers unintended avenues to evasion attacks and backdoor attacks that an adversary can leverage to control or misguide the deployed model,” Chen says. “In my view, similar to car model development and manufacturing, a comprehensive ‘in-house collision test’ for different adversarial threats on an AI model should be the new norm to practice to better understand and mitigate potential security risks.”
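What might such a "collision test" look like in practice? One simple form is to report accuracy under a bounded worst-case perturbation next to ordinary test accuracy. The sketch below does this for a two-feature linear classifier. The model, the four test samples, and the helper names are all hypothetical, and a real evaluation would rely on an established robustness toolkit and cover many more threat types.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[List[float], int]  # (features, true label)

# Made-up linear model: predict 1 when x[0] - x[1] > 0.
WEIGHTS = [1.0, -1.0]
BIAS = 0.0

# Made-up test set; every sample is classified correctly when unperturbed.
TEST_SET: List[Sample] = [
    ([1.0, 0.0], 1),
    ([0.3, 0.15], 1),
    ([0.0, 1.0], 0),
    ([0.1, 0.25], 0),
]


def predict(x: Sequence[float]) -> int:
    total = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1 if total > 0 else 0


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def worst_case(x: Sequence[float], label: int, epsilon: float) -> List[float]:
    """Move each feature by epsilon in the direction that hurts the true label."""
    direction = -1 if label == 1 else 1
    return [xi + direction * epsilon * sign(w) for xi, w in zip(x, WEIGHTS)]


def accuracy(data: Sequence[Sample], epsilon: float = 0.0) -> float:
    """Fraction of samples still classified correctly under the perturbation."""
    correct = sum(predict(worst_case(x, y, epsilon)) == y for x, y in data)
    return correct / len(data)


if __name__ == "__main__":
    print("clean accuracy: ", accuracy(TEST_SET))
    print("robust accuracy:", accuracy(TEST_SET, epsilon=0.1))
```

In this toy setup the model is perfect on clean data, yet half of its predictions flip under a perturbation of 0.1 per feature, which is the kind of gap a single accuracy figure hides.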

In his work at IBM Research, Chen has helped develop various methods to detect and patch adversarial vulnerabilities in machine learning models. With the advent of the Adversarial ML Threat Matrix, the efforts of Chen and other AI and security researchers will put developers in a better position to create secure and robust machine learning systems.

“My hope is that with this study, the model developers and machine learning researchers can pay more attention to the security (robustness) aspect of the model and look beyond a single performance metric such as accuracy,” Chen says.

This article was originally published by Ben Dickson on TechTalks, a publication that examines trends in technology, how they affect the way we live and do business, and the problems they solve. But we also discuss the evil side of technology, the darker implications of new tech and what we need to look out for. You can read the original article here.

Published November 6, 2020 — 11:00 UTC